AWS Automated Vulnerability Assessment Using Nikto – Fargate+ECS Container Cluster using Lambda+API Gateway+DynamoDB

The task of maintaining an up-to-date and secure web platform can be challenging. New vulnerabilities are found constantly, so we need to leverage industry tools that help keep our systems up to date and notify us when they are found to be insecure.

In this article I will showcase a set of AWS services you can leverage to automate web server vulnerability assessment using Nikto. There are many tools out there and many ways of accomplishing this task. I will show you how we can leverage Docker container services within AWS to perform the scans and keep us up to date with the status of our web services.

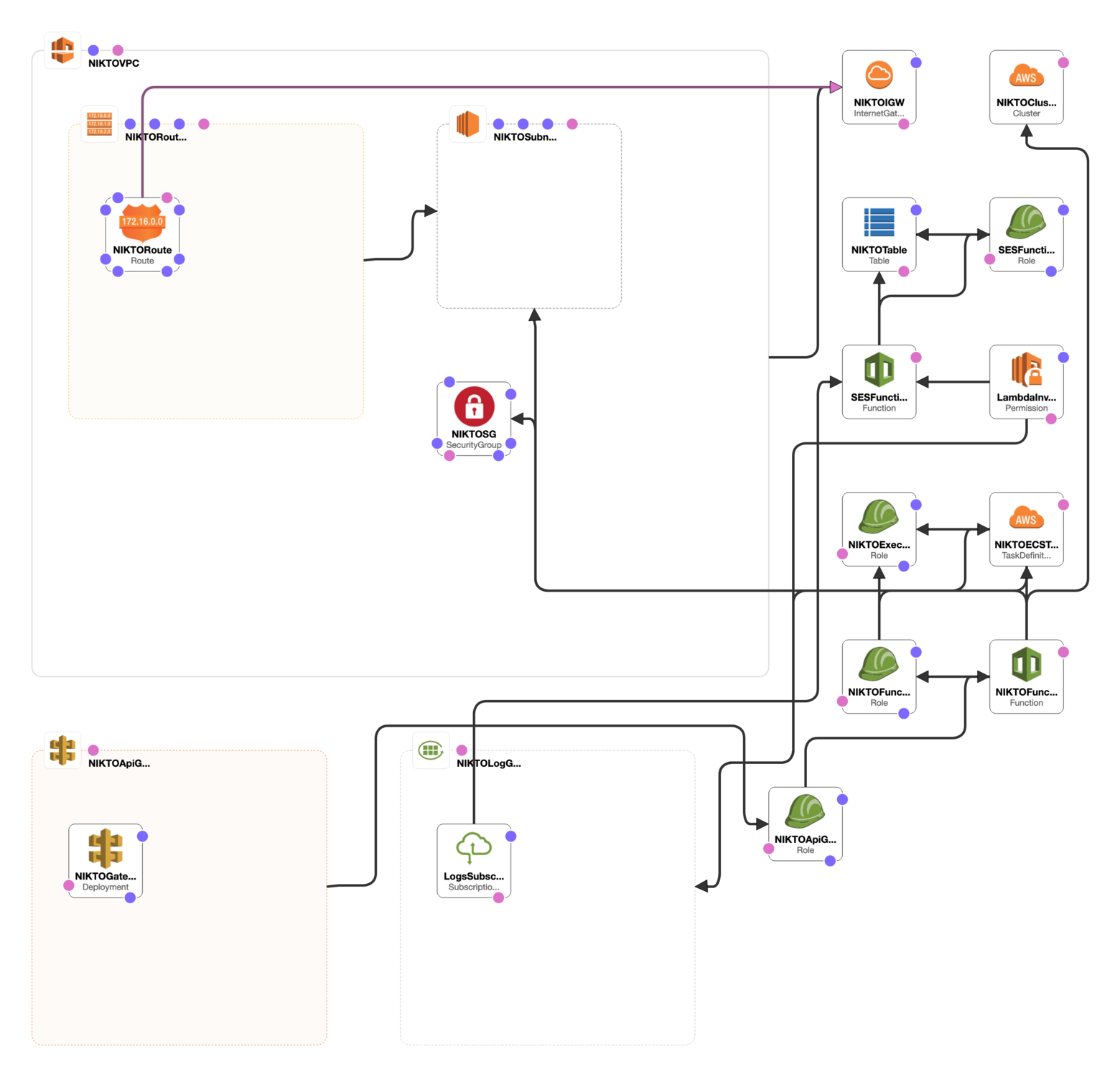

For this exercise, I will leverage a set of AWS services wrapped around container services. The underlying container will have the tools we need to scan our web services, whilst the surrounding services take care of the automation and notification functions we need. In particular, the deployment will consist of the following AWS services:

- ECS – Container services that will run the Nikto scan tasks. Our containers will be launched from an ECS task definition by a serverless Lambda function. We will create an ECS Fargate cluster, as it allows us to focus on the tasks rather than the containerised infrastructure. Each task is atomic in a sense, hence AWS Fargate is an ideal option. For this exercise we will use the Docker image k0st/alpine-nikto.

- VPC – Our containers will run scans over the internet and will need to be attached to an AWS VPC. The VPC will be configured with an internet gateway, and the tasks will be assigned public IP addresses so they can perform the scans.

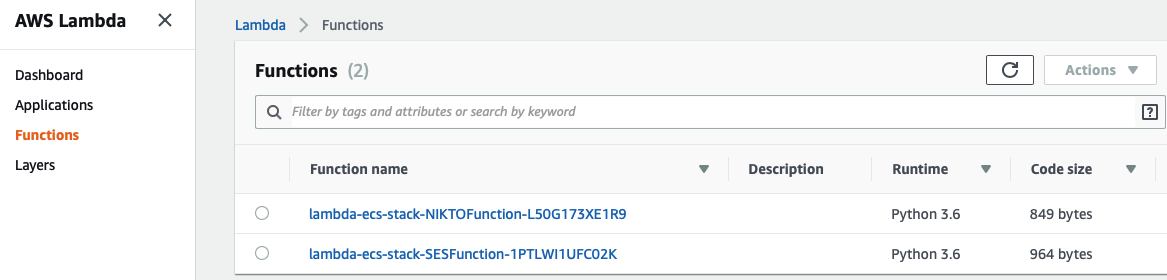

- Lambda – Our functions will be deployed using the AWS Serverless Application Model (SAM). We will create a Lambda function in Python that scans our hosts on demand by running the ECS task definition (which launches the container and performs the actual scan). We will also create a second Lambda function that picks up the logs from the ECS task (i.e. the results of our scan), writes them into a DynamoDB table and then forwards them to us via a verified email address we have pre-provisioned in Amazon Simple Email Service (SES).

- API Gateway – To actually launch a Nikto scan task, we need some form of endpoint that calls Lambda. We will use API Gateway, defined in the same SAM template, to publish an API endpoint that invokes the Lambda function with the host we wish to scan.

- CloudWatch – ECS tasks will be configured to send all logs to a specific CloudWatch log group, with a log stream for each task. A subscription filter will invoke our second Lambda function as log events arrive, which will continually write the data into our DynamoDB table. We could have streamed our logs to Kinesis and subsequently into an Elasticsearch cluster to perform some further analytics; maybe we can do this another day.

- DynamoDB – A table will store the results of each Nikto scan. Once a scan is complete, the Lambda function will send the scan results to our nominated email address using SES.

- SES – We want to be notified upon Nikto scan completion with the Nikto scan results. Our second Lambda function will perform this task by sending an email to us using SES

- IAM – Access control is managed through AWS IAM. Each of our functions and tasks will require permissions to perform certain actions, hence a set of IAM roles will be defined to grant them.

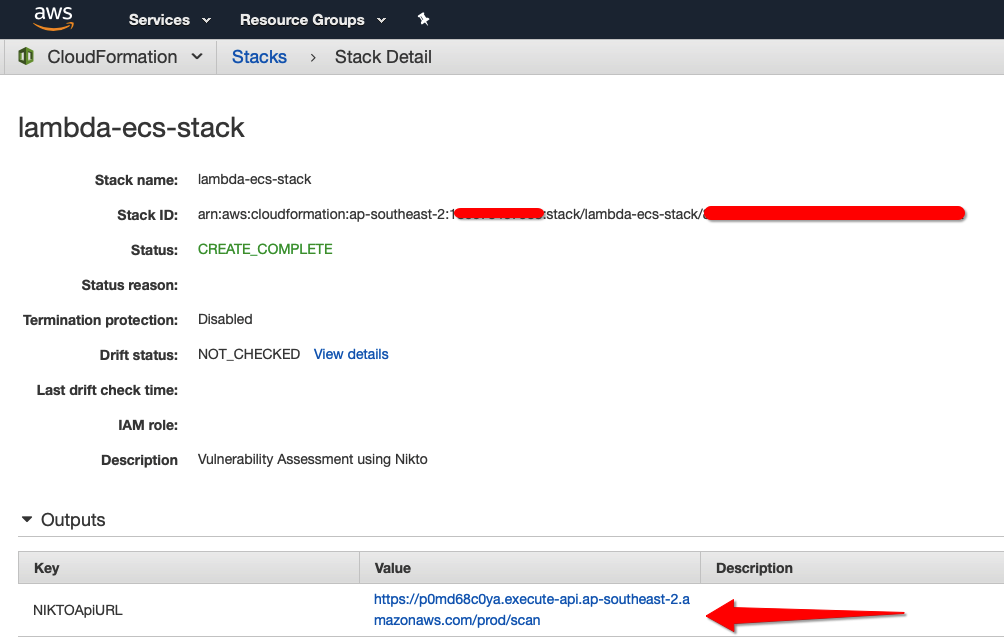

- CloudFormation – All components will be deployed using CloudFormation. We will create a stack of all the resources and use it to manage our deployment. The stack has one parameter, the pre-provisioned SES email address, and one output, the API Gateway endpoint we will use to perform our scans.

Putting it all together, the below image demonstrates our topology using CloudFormation. It shows each service and how it is linked to other services.

This article focuses on the AWS services that can be leveraged to assist us in performing automated vulnerability assessments. It is also a blueprint for other tasks that use ECS to provide compute on demand. Whilst I have simply chosen k0st/alpine-nikto from Docker Hub as the image, you can choose an image to your liking and customise the inputs to suit your needs.

I have also used one big monolithic CloudFormation template for this exercise. I have done this on purpose to show that this entire platform, so to speak, can be run from a simple text file, demonstrating Infrastructure as Code. You can use multiple CloudFormation templates and StackSets for your deployment; the aim here is to demonstrate capability, not which way is right or wrong.

Below, I will show each section of the template based on the services outlined above and then demonstrate the deployment. At the bottom of this article I will show the entire template which I will put onto GitHub and wrap some documentation around it (eventually…).

Firstly, our template is written in YAML. Below we define the template format version, the SAM transform (which tells CloudFormation to process the SAM resource types used in our template), an input parameter for the SES email address and an output that gives us the API URL endpoint to connect to:

```yaml
AWSTemplateFormatVersion: 2010-09-09
Transform: AWS::Serverless-2016-10-31
Description: Vulnerability Assessment using Nikto
Parameters:
  SESEmail:
    Type: String
Outputs:
  NIKTOApiURL:
    Value: !Sub https://${NIKTOApiGateway}.execute-api.${AWS::Region}.amazonaws.com/prod/scan
```
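To see what the NIKTOApiURL output resolves to, here is a small Python sketch that mirrors the `!Sub` expression. The API ID `abc123` is a made-up placeholder; the stage and path default to the `prod` stage and `/scan` path used in this template.

```python
def build_api_url(api_id: str, region: str, stage: str = "prod", path: str = "scan") -> str:
    """Mirror the !Sub expression used for the NIKTOApiURL output."""
    return f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}/{path}"


# "abc123" is a placeholder; CloudFormation substitutes the real RestApi ID
print(build_api_url("abc123", "ap-southeast-2"))
```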

The rest of the document defines our stack resources. I will start off with the IAM roles we need for our stack. We will create four roles:

- NIKTOFunctionRole: The Nikto Lambda function role which will have permission to execute the lambda code and launch the ECS task

- NIKTOApiGatewayRole: This role is the API Gateway role that will have the permission to call the Lambda scan function

- NIKTOExecutionRole: The ECS task execution role to execute the ECS container tasks

- SESFunctionRole: The execution role for the second Lambda function described above, allowing it to write the CloudWatch logs into DynamoDB and then send them to us using SES
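All four roles share the same trust-policy shape and differ only in the service principal allowed to assume them. The plain-Python sketch below (no AWS calls) generates those trust documents so the pattern is easy to see; the `ROLE_PRINCIPALS` mapping simply restates the principals used in the template.

```python
import json

# Service principal allowed to assume each role, as used in this template
ROLE_PRINCIPALS = {
    "NIKTOFunctionRole": "lambda.amazonaws.com",
    "NIKTOApiGatewayRole": "apigateway.amazonaws.com",
    "NIKTOExecutionRole": "ecs-tasks.amazonaws.com",
    "SESFunctionRole": "lambda.amazonaws.com",
}


def trust_policy(service: str) -> dict:
    """Build an AssumeRolePolicyDocument that lets `service` assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": [service]},
                "Action": ["sts:AssumeRole"],
            }
        ],
    }


for role, service in ROLE_PRINCIPALS.items():
    print(role, json.dumps(trust_policy(service)))
```

The permissions each role actually grants (ecs:RunTask, iam:PassRole, lambda:InvokeFunction, SES and DynamoDB actions) are attached separately through ManagedPolicyArns and Policies.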

```yaml
NIKTOFunctionRole:
  Type: 'AWS::IAM::Role'
  Properties:
    ManagedPolicyArns:
      - 'arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole'
    AssumeRolePolicyDocument:
      Version: 2012-10-17
      Statement:
        - Effect: Allow
          Sid: Lambda
          Principal:
            Service:
              - lambda.amazonaws.com
          Action:
            - 'sts:AssumeRole'
    Policies:
      - PolicyName: ECS
        PolicyDocument:
          Statement:
            - Effect: Allow
              Action:
                - 'ecs:RunTask'
              Resource:
                - !Ref NIKTOECSTaskDefinition
      - PolicyName: IAM
        PolicyDocument:
          Statement:
            - Effect: Allow
              Action:
                - 'iam:PassRole'
              Resource:
                - !GetAtt NIKTOExecutionRole.Arn
NIKTOApiGatewayRole:
  Type: AWS::IAM::Role
  Properties:
    AssumeRolePolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Principal:
            Service: apigateway.amazonaws.com
          Action: sts:AssumeRole
    Policies:
      - PolicyName: InvokeLambda
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
            - Effect: Allow
              Action:
                - lambda:InvokeFunction
              Resource:
                - !GetAtt NIKTOFunction.Arn
NIKTOExecutionRole:
  Type: 'AWS::IAM::Role'
  Properties:
    ManagedPolicyArns:
      - 'arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy'
    AssumeRolePolicyDocument:
      Version: 2012-10-17
      Statement:
        - Effect: Allow
          Sid: ECS
          Principal:
            Service:
              - ecs-tasks.amazonaws.com
          Action:
            - 'sts:AssumeRole'
SESFunctionRole:
  Type: 'AWS::IAM::Role'
  Properties:
    ManagedPolicyArns:
      - 'arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole'
    AssumeRolePolicyDocument:
      Version: 2012-10-17
      Statement:
        - Effect: Allow
          Sid: Lambda
          Principal:
            Service:
              - lambda.amazonaws.com
          Action:
            - 'sts:AssumeRole'
    Policies:
      - PolicyName: SES
        PolicyDocument:
          Statement:
            - Effect: Allow
              Action:
                - 'ses:SendEmail'
              Resource: '*'
      - PolicyName: DynamoDB
        PolicyDocument:
          Statement:
            - Effect: Allow
              Action:
                - 'dynamodb:Get*'
                - 'dynamodb:Query'
                - 'dynamodb:Delete*'
                - 'dynamodb:Update*'
                - 'dynamodb:PutItem'
                - 'dynamodb:Scan*'
              Resource: !GetAtt NIKTOTable.Arn
```

Next, we will define our ECS resources, i.e. the ECS cluster and the ECS task definition that will instantiate the containers. For the ECS containers, we have specified some compute parameters, the Docker image to use (k0st/alpine-nikto) and the log configuration, which simply sends all output to CloudWatch within a specific log group that we will define later on.
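Fargate only accepts certain CPU and memory pairings, so it is worth checking the `Cpu: 512` / `Memory: 2GB` choice before deploying. The sketch below encodes the original five Fargate CPU sizes and their memory ranges in MiB; larger sizes that AWS added later are deliberately omitted, so treat the table as an assumption to re-check against the ECS documentation.

```python
# Fargate task sizes: CPU units -> allowed memory values in MiB
# (only the original five CPU sizes; larger sizes added later are omitted)
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def memory_to_mib(memory: str) -> int:
    """Convert a task-definition memory value such as '2GB' or '2048' to MiB."""
    value = memory.strip().upper()
    if value.endswith("GB"):
        return int(float(value[:-2]) * 1024)
    return int(value)


def is_valid_fargate_size(cpu: int, memory: str) -> bool:
    """Return True if the CPU/memory pair is one of the sizes listed above."""
    return memory_to_mib(memory) in FARGATE_SIZES.get(cpu, [])


print(is_valid_fargate_size(512, "2GB"))  # True: the size used in this template
```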

```yaml
NIKTOCluster:
  Type: 'AWS::ECS::Cluster'
  Properties:
    ClusterName: nikto-lambda
NIKTOECSTaskDefinition:
  Type: 'AWS::ECS::TaskDefinition'
  Properties:
    Cpu: 512
    Memory: 2GB
    NetworkMode: awsvpc
    RequiresCompatibilities:
      - FARGATE
    ExecutionRoleArn: !GetAtt NIKTOExecutionRole.Arn
    ContainerDefinitions:
      - Name: nikto
        Image: k0st/alpine-nikto
        LogConfiguration:
          LogDriver: awslogs
          Options:
            awslogs-group: !Ref NIKTOLogGroup
            awslogs-region: !Sub ${AWS::Region}
            awslogs-stream-prefix: nikto
```

Now we will define our two functions. I have embedded the Python code inline; you would generally upload a zip file of the Lambda function to S3 instead, however I am just keeping it simple. A summary of each function is as follows:

- NIKTOFunction – The first function takes the host to scan (either a DNS name or an IP address) and the port to scan (if the port uses SSL, we need to set the “ssl” flag). As it is written in Python, I am using the boto3 library, which provides classes for easily integrating our code with AWS services. The code calls the ECS run_task method, which launches the Fargate ECS task that performs the scan

- SESFunction – The second Lambda function takes the CloudWatch logs as they arrive and writes them into the DynamoDB table we have defined as “NIKTOTable”. Once the scan is detected as complete, the function sends us an email with the scan results
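It helps to know that CloudWatch Logs hands a subscribed Lambda function its data as a base64-encoded, gzip-compressed JSON document under `event['awslogs']['data']`. The sketch below decodes that payload the same way SESFunction does. The `encode_awslogs` helper and the sample stream name are hypothetical, added only for a local round-trip; real payloads also carry fields such as `logGroup` and `messageType` that SESFunction ignores.

```python
import base64
import gzip
import json


def decode_awslogs(event: dict) -> dict:
    """Decode the base64-encoded, gzip-compressed CloudWatch Logs subscription payload."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(compressed))


def encode_awslogs(payload: dict) -> dict:
    """Hypothetical test helper: wrap a payload the way CloudWatch Logs delivers it."""
    data = base64.b64encode(gzip.compress(json.dumps(payload).encode("utf-8")))
    return {"awslogs": {"data": data.decode("ascii")}}


# Hypothetical sample containing only the fields SESFunction reads
sample = {
    "logStream": "nikto/nikto/example-task-id",
    "logEvents": [
        {"id": "1", "timestamp": 1000, "message": "- Nikto v2"},
        {"id": "2", "timestamp": 2000, "message": "+ 1 host(s) tested"},
    ],
}
decoded = decode_awslogs(encode_awslogs(sample))
print(decoded["logStream"], len(decoded["logEvents"]))
```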

NIKTOFunction:

Type: 'AWS::Serverless::Function'

Properties:

Handler: index.handler

Runtime: python3.6

InlineCode: |

# Lambda function code. Takes in 3 mandaatory parameters via RestApi:

# NIKTOHost - The host you wish to scan

# NIKTOPort - The port to scan

# ssl - True or False to set the SSL flag

# ECS image based on k0st/alpine-nikto from DockerHub

import os

import boto3

import logging

import json

logger = logging.getLogger()

logger.setLevel(logging.INFO)

os.environ['AWS_DEFAULT_REGION'] = os.environ['AWSREGION']

def handler(event, context):

logger.info(json.dumps(event, indent=2))

#print("Received event: " + json.dumps(event, indent=2))

body = json.loads(event['body'])

logger.info(body)

if body['ssl'] == "true":

command = [ "-host", body['NIKTOHost'], "-port", body['NIKTOPort'] , "-ssl"]

else:

command = [ "-host", body['NIKTOHost'], "-port", body['NIKTOPort'] ]

try:

client = boto3.client('ecs')

response = client.run_task(

cluster=os.environ['NIKTOCluster'],

taskDefinition=os.environ['NIKTOECSTaskArn'],

count=1,

launchType='FARGATE',

overrides={

'containerOverrides': [

{

'name': 'nikto',

'command': command

}

]

},

networkConfiguration={

'awsvpcConfiguration': {

'subnets': [

os.environ['NIKTOSubnet'],

],

'securityGroups': [

os.environ['NIKTOSecGroup'],

],

'assignPublicIp': 'ENABLED'

}

}

)

logger.info(response)

return {

"statusCode": 200

}

except:

return {

"statusCode": 501

}

Role: !GetAtt NIKTOFunctionRole.Arn

Environment:

Variables:

NIKTOCluster: !GetAtt NIKTOCluster.Arn

NIKTOECSTaskArn: !Ref NIKTOECSTaskDefinition

NIKTOSubnet: !Ref NIKTOSubnet

NIKTOSecGroup: !Ref NIKTOSG

AWSREGION: !Sub ${AWS::Region}

SESFunction:

Type: 'AWS::Serverless::Function'

Properties:

Handler: index.handler

Runtime: python3.6

InlineCode: |

import gzip

import json

import base64

import boto3

import os

import logging

from boto3.dynamodb.conditions import Key

logger = logging.getLogger()

logger.setLevel(logging.INFO)

def handler(event, context):

logger.info(json.dumps(event, indent=2))

print(f'Logging Event: {event}')

print(f"Awslog: {event['awslogs']}")

cw_data = event['awslogs']['data']

print(f'data: {cw_data}')

print(f'type: {type(cw_data)}')

sendemail = 0

compressed_payload = base64.b64decode(cw_data)

uncompressed_payload = gzip.decompress(compressed_payload)

payload = json.loads(uncompressed_payload)

log_events = payload['logEvents']

scan_data = ""

streamName = payload['logStream']

# Let's dump the logs into DynamoDB

dynamodb = boto3.resource('dynamodb')

table = dynamodb.Table(os.environ['NIKTOTable'])

for event in payload['logEvents']:

table.put_item(

Item={

'streamName': streamName,

'timestamp': event['timestamp'],

'id': event['id'],

'message': event['message']

}

)

# Let's check if the NIKTO Scan completed, if so set the sendemail flag

if "host(s) tested" in event['message']:

sendemail = 1

response=""

if sendemail == 1:

scan_data = ""

fe = Key('streamName').eq(streamName)

pe = "#msg"

ean = { "#msg": "message", }

esk = None

# Need to set AWS region to us-east-1 for SES

NIKTOLogs = table.scan(

FilterExpression=fe,

ProjectionExpression=pe,

ExpressionAttributeNames=ean

)

logger.info(json.dumps(NIKTOLogs['Items']))

for i in NIKTOLogs['Items']:

scan_data += str(i['message']) + "\n"

os.environ['AWS_DEFAULT_REGION'] = 'us-east-1'

client = boto3.client('ses' )

response = client.send_email(

Destination={

'ToAddresses': [ os.environ['SESEmail'] ]

},

Message={

'Body': {

'Text': {

'Charset': 'UTF-8',

'Data': scan_data,

},

},

'Subject': {

'Charset': 'UTF-8',

'Data': "NIKTO Logging Event",

},

},

Source=os.environ['SESEmail']

)

logger.info(response)

Role: !GetAtt SESFunctionRole.Arn

Environment:

Variables:

SESEmail: !Ref SESEmail

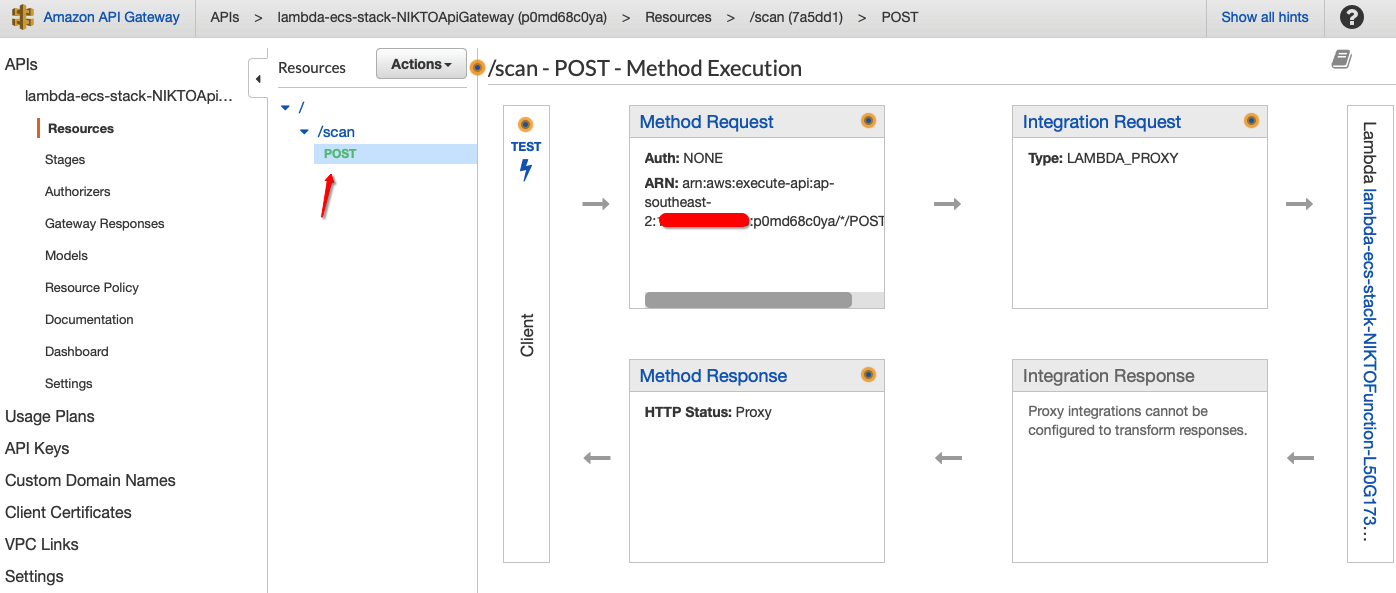

NIKTOTable: !Ref NIKTOTableNext we will define the API Gateway. Again, I have chosen to define the body of the gateway inline within the template using a swagger definition. Swagger is an open specification we can use to specify the parameters for our API such as the path (we are using /scan) and the method (HTTP POST). The API will simply pass the JSON input posted to the API to the NIKTOFunction Lambda function as is. You will notice above in our function we are extracting:

- NIKTOHost – IP or DNS name of the host to scan

- NIKTOPort – The TCP port, most likely 80 or 443

- ssl – Flag set to "true" if the port uses SSL (e.g. 443)
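Because the API passes the JSON body straight through, NIKTOFunction trusts whatever it receives. A hypothetical `parse_scan_request` helper like the one below could validate the three fields before `run_task` is called. The port-range check and the case-insensitive handling of `ssl` are my assumptions, not part of the original function.

```python
import json


def parse_scan_request(raw_body: str) -> list:
    """Validate a /scan request body and return the Nikto arguments.

    Reads the same fields as NIKTOFunction (NIKTOHost, NIKTOPort, ssl);
    the validation rules are illustrative assumptions.
    """
    body = json.loads(raw_body)
    host = str(body.get("NIKTOHost", "")).strip()
    if not host:
        raise ValueError("NIKTOHost is required")
    port = int(body.get("NIKTOPort", 0))  # accepts "443" or 443
    if not 1 <= port <= 65535:
        raise ValueError("NIKTOPort must be between 1 and 65535")
    command = ["-host", host, "-port", str(port)]
    if str(body.get("ssl", "false")).lower() == "true":
        command.append("-ssl")
    return command


print(parse_scan_request('{"NIKTOHost": "example.com", "NIKTOPort": "443", "ssl": "true"}'))
```

Raising `ValueError` keeps the helper independent of API Gateway; the handler would catch it and return a 400 response instead of launching a task.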

Once the API is defined, we need a deployment to be able to call it. Here we deploy it to a stage named prod:

```yaml
NIKTOApiGateway:
  Type: AWS::ApiGateway::RestApi
  Properties:
    Name: !Sub "${AWS::StackName}-NIKTOApiGateway"
    Description: !Sub "${AWS::StackName}-NIKTOApiGateway"
    FailOnWarnings: true
    Body:
      swagger: 2.0
      info:
        description: !Sub "${AWS::StackName}-NIKTOApiGateway"
        version: 1.0
      basePath: /
      schemes:
        - https
      consumes:
        - application/json
      produces:
        - application/json
      paths:
        /scan:
          post:
            description: !Sub "${AWS::StackName}-NIKTOApiGateway"
            x-amazon-apigateway-integration:
              responses:
                default:
                  statusCode: '200'
              uri: !Sub "arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${NIKTOFunction.Arn}/invocations"
              credentials: !GetAtt NIKTOApiGatewayRole.Arn
              passthroughBehavior: when_no_match
              httpMethod: POST
              type: aws_proxy
              requestTemplates:
                "application/json": |
                  {
                    "body" : $input.json('$')
                  }
            operationId: postScan
NIKTOGatewayDeployment:
  Type: AWS::ApiGateway::Deployment
  Properties:
    RestApiId: !Ref NIKTOApiGateway
    StageName: prod
```

The above will produce a REST API endpoint that is unauthenticated. Basically, any anonymous user who knows the URL will be able to call the function (and potentially put you out of pocket). In a real production scenario you would define either an API key (https://docs.aws.amazon.com/apigateway/latest/developerguide/api-gateway-setup-api-key-with-console.html) or let Lambda handle access through an authorizer within the API definition, using the “x-amazon-apigateway-authorizer” extension. Further details can be found here – https://docs.aws.amazon.com/apigateway/latest/developerguide/api-gateway-swagger-extensions-authorizer.html.
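To give a feel for the authorizer option, here is a minimal TOKEN-type Lambda authorizer in Python. It returns the IAM policy document that API Gateway expects from an authorizer, allowing or denying `execute-api:Invoke` on the requested method. The shared-secret comparison, the `SCAN_API_TOKEN` environment variable and the `principalId` value are hypothetical, and wiring the function into the Swagger body via `x-amazon-apigateway-authorizer` is not shown.

```python
import hmac
import os


def authorizer_handler(event, context):
    """Sketch of a TOKEN-type Lambda authorizer for the /scan API.

    SCAN_API_TOKEN is a hypothetical environment variable holding a shared
    secret; it is not part of the template in this article.
    """
    expected = os.environ.get("SCAN_API_TOKEN", "")
    supplied = event.get("authorizationToken", "")
    # Constant-time comparison; an empty configured secret never grants access
    allowed = bool(expected) and hmac.compare_digest(
        supplied.encode("utf-8"), expected.encode("utf-8")
    )
    return {
        "principalId": "nikto-scan-client",  # hypothetical caller identifier
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Action": "execute-api:Invoke",
                    "Effect": "Allow" if allowed else "Deny",
                    "Resource": event["methodArn"],
                }
            ],
        },
    }
```

API Gateway places the value of the configured identity-source header in `authorizationToken` and the ARN of the method being called in `methodArn`, so the same function can guard every route of the API.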

There are a number of ways you can capture the output of the scan. I would actually recommend something like writing the output to S3 or another target. However, to keep this exercise simple and use a ready-made image from Docker Hub, I have opted for CloudWatch, which is a built-in capability within ECS (as configured earlier when we specified the awslogs log driver). Below we do three main things within our template:

- Define a log group for which ECS tasks can log output to (NIKTOLogGroup)

- Define a subscription filter that sends the logs arriving in the log group above, in real time, to the second Lambda function defined earlier

- Give CloudWatch Logs permission to invoke that Lambda function as logs are received

NIKTOLogGroup:

Type: "AWS::Logs::LogGroup"

Properties:

RetentionInDays: 1

LogsSubscriptionFilter:

Type: AWS::Logs::SubscriptionFilter

Properties:

LogGroupName: !Ref NIKTOLogGroup

FilterPattern: ""

DestinationArn: !GetAtt SESFunction.Arn

LambdaInvokePermission:

Type: AWS::Lambda::Permission

Properties:

FunctionName: !GetAtt SESFunction.Arn

Action: 'lambda:InvokeFunction'

Principal: !Sub "logs.${AWS::Region}.amazonaws.com"

SourceAccount: !Ref 'AWS::AccountId'

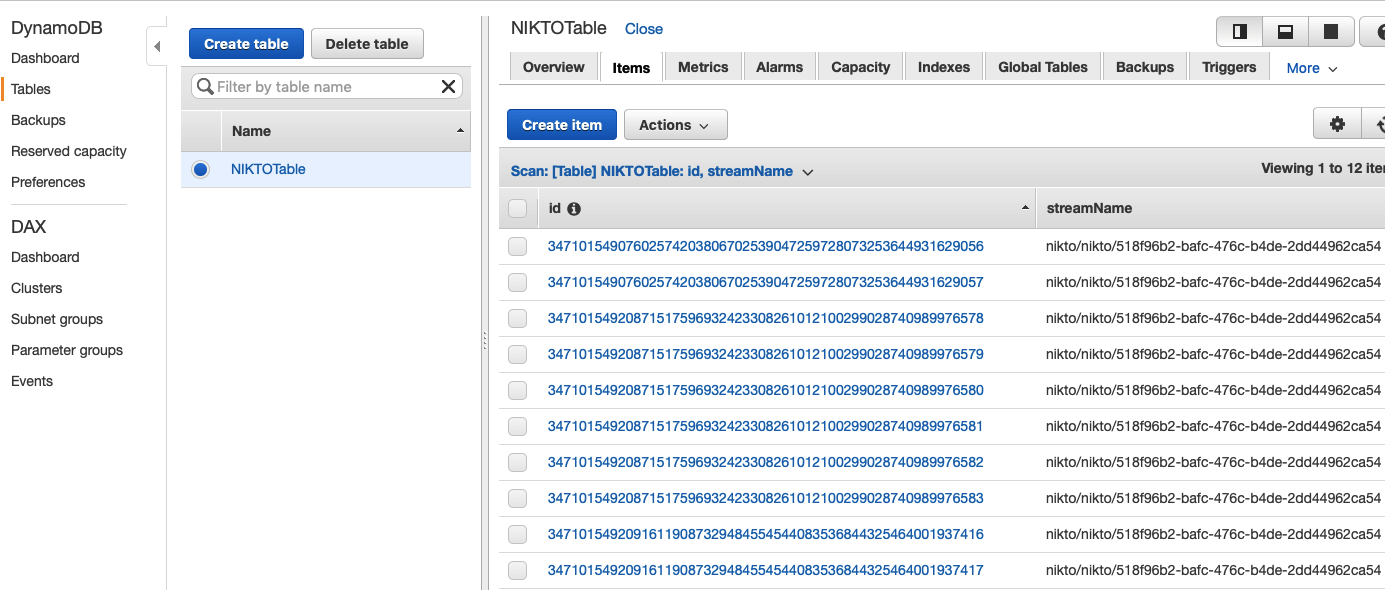

SourceArn: !GetAtt NIKTOLogGroup.ArnAs mentioned earlier, we will dump our logs get dumped into a DynamoDB table. The following is the definition of the DynamoDB table named NIKTOTable which will hold the event log records from CloudWatch. Each CloudWatch log entry had a unique “id” which we will use as the unique attribute to identify log items.

```yaml
NIKTOTable:
  Type: AWS::DynamoDB::Table
  Description: NIKTO Scan Records Table
  Properties:
    TableName: "NIKTOTable"
    AttributeDefinitions:
      - AttributeName: "id"
        AttributeType: "S"
      - AttributeName: "streamName"
        AttributeType: "S"
    KeySchema:
      - AttributeName: "id"
        KeyType: "HASH"
      - AttributeName: "streamName"
        KeyType: "RANGE"
    ProvisionedThroughput:
      ReadCapacityUnits: 1
      WriteCapacityUnits: 1
```

The final part of our deployment will be to define the network components to enable the ECS tasks to perform our scans. This includes the following AWS VPC constructs:

- NIKTOVPC – The underlying VPC for the deployment

- NIKTOSubnet – The subnet which the ECS containers will be a member of

- NIKTOSubnetAssociation – This is needed to associate the subnet with a route table. If we don’t define this, the default route table that the VPC creates will be used which will result in the inability to route to the Internet from our subnet.

- NIKTOIGW & NIKTOAttachGateway – The Internet Gateway for the VPC

- NIKTORouteTable & NIKTORoute – The underlying route table for the VPC. We will define a default route to use our Internet Gateway

- NIKTOSG – The security group that filters traffic to the ECS containers
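Each Fargate task in `awsvpc` mode receives its own elastic network interface and private IP address from NIKTOSubnet, so the subnet size bounds how many scans can run at once. A quick check with Python's standard `ipaddress` module, using the CIDR blocks from the template:

```python
import ipaddress

vpc = ipaddress.ip_network("192.168.0.0/24")     # NIKTOVPC CidrBlock
subnet = ipaddress.ip_network("192.168.0.0/24")  # NIKTOSubnet CidrBlock

# A subnet must fall inside its VPC; here it spans the whole VPC range
assert subnet.subnet_of(vpc)

# AWS reserves five addresses in every subnet (the first four and the last one)
AWS_RESERVED_PER_SUBNET = 5
usable = subnet.num_addresses - AWS_RESERVED_PER_SUBNET
print(f"{subnet} offers {usable} addresses for Fargate task ENIs")  # 251
```

Roughly 251 concurrent scans is plenty for this exercise; a larger VPC and subnet would be needed only if you intend to fan out far more tasks at once.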

```yaml
NIKTOVPC:
  Type: 'AWS::EC2::VPC'
  Properties:
    CidrBlock: 192.168.0.0/24
NIKTOSubnet:
  Type: 'AWS::EC2::Subnet'
  Properties:
    CidrBlock: 192.168.0.0/24
    VpcId: !Ref NIKTOVPC
NIKTOSubnetAssociation:
  Type: AWS::EC2::SubnetRouteTableAssociation
  Properties:
    RouteTableId: !Ref NIKTORouteTable
    SubnetId: !Ref NIKTOSubnet
NIKTOIGW:
  Type: 'AWS::EC2::InternetGateway'
NIKTORouteTable:
  Type: 'AWS::EC2::RouteTable'
  Properties:
    VpcId: !Ref NIKTOVPC
NIKTORoute:
  Type: 'AWS::EC2::Route'
  # The route can only target the gateway once it is attached to the VPC
  DependsOn: NIKTOAttachGateway
  Properties:
    RouteTableId: !Ref NIKTORouteTable
    DestinationCidrBlock: 0.0.0.0/0
    GatewayId: !Ref NIKTOIGW
NIKTOAttachGateway:
  Type: 'AWS::EC2::VPCGatewayAttachment'
  Properties:
    VpcId: !Ref NIKTOVPC
    InternetGatewayId: !Ref NIKTOIGW
NIKTOSG:
  Type: 'AWS::EC2::SecurityGroup'
  Properties:
    GroupDescription: Nikto SG
    # Placeholder ingress from localhost only: the scanner needs no inbound
    # traffic, and outbound traffic is allowed by default
    SecurityGroupIngress:
      - IpProtocol: -1
        CidrIp: 127.0.0.1/32
    VpcId: !Ref NIKTOVPC
```

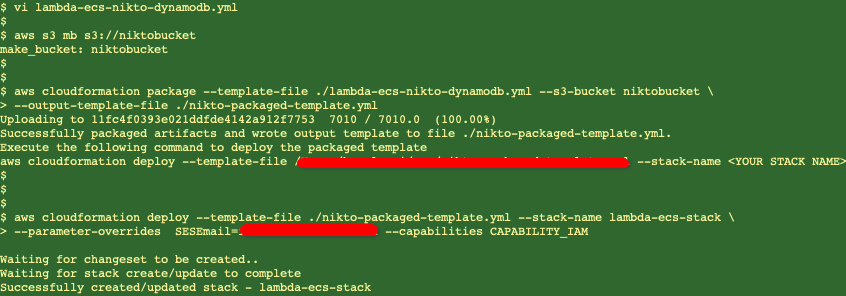

Now that we have all the components ready to go, we can create the CloudFormation stack. As mentioned earlier, we will simply put all of the above into a single YAML file and deploy our stack with the AWS CLI. The deployment consists of the following steps:

- Create an S3 bucket to package the deployment.

- Package the deployment. The file holding all the content above (i.e. our CloudFormation template) is lambda-ecs-nikto-dynamodb.yml. The output is a packaged template, nikto-packaged-template.yml, which we will use to create our stack.

- Deploy the stack using the packaged template. We will name the stack lambda-ecs-stack. I have masked the SES verified email address; you will need to supply your own.

From the shell prompt, run the following commands:

```shell
aws s3 mb s3://niktobucket
aws cloudformation package --template-file ./lambda-ecs-nikto-dynamodb.yml --s3-bucket niktobucket --output-template-file ./nikto-packaged-template.yml
aws cloudformation deploy --template-file ./nikto-packaged-template.yml --stack-name lambda-ecs-stack --parameter-overrides SESEmail=<YOUR SES VERIFIED EMAIL ADDRESS> --capabilities CAPABILITY_IAM
```


Let’s have a look at the CloudFormation stack and get the output of the API URL to launch a Nikto Scan:

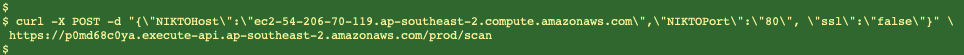

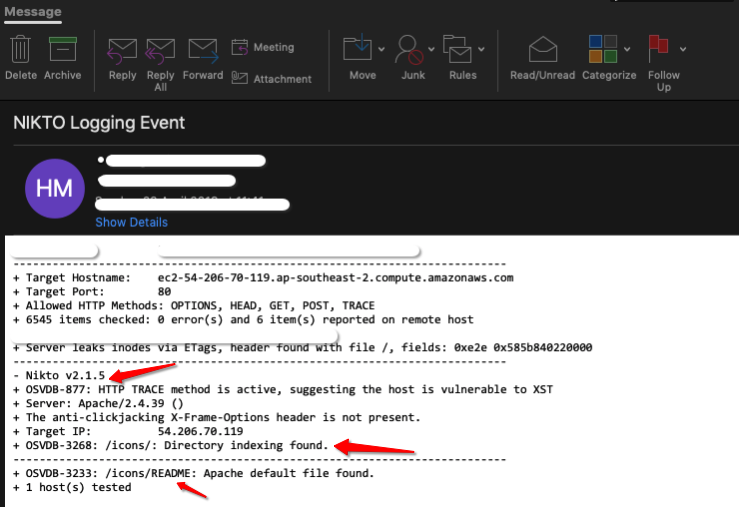

Next, let’s test the API and perform a Nikto scan. I have created a simple EC2 instance to scan. The aim is to test the functionality of this deployment, hence I have not set up a deliberately vulnerable web server; we should still get enough output to confirm that the deployment works as expected.

To call the API, we will use the curl command to send a JSON body to the API Gateway endpoint. You could further automate this task by creating scan definitions for your systems, for example with a CloudWatch Events scheduled rule (cron expression) that launches the scans at a set interval.

```shell
curl -X POST -d "{\"NIKTOHost\":\"ec2-54-206-70-119.ap-southeast-2.compute.amazonaws.com\",\"NIKTOPort\":\"80\", \"ssl\":\"false\"}" https://p0md68c0ya.execute-api.ap-southeast-2.amazonaws.com/prod/scan
```
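If you would rather drive scans from a script, the same request can be built with Python's standard library. This is a sketch: the endpoint below is a placeholder for your stack's NIKTOApiURL output, and `build_scan_request` is a hypothetical helper that only builds the request; sending it is left to `urllib.request.urlopen`.

```python
import json
import urllib.request


def build_scan_request(api_url: str, host: str, port: int, ssl: bool) -> urllib.request.Request:
    """Build (but do not send) the same POST request as the curl example."""
    body = {"NIKTOHost": host, "NIKTOPort": str(port), "ssl": "true" if ssl else "false"}
    return urllib.request.Request(
        api_url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder endpoint; substitute the NIKTOApiURL output of your stack
req = build_scan_request(
    "https://abc123.execute-api.ap-southeast-2.amazonaws.com/prod/scan", "example.com", 80, False
)
print(req.get_method(), req.full_url)
# To send it: urllib.request.urlopen(req)
```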

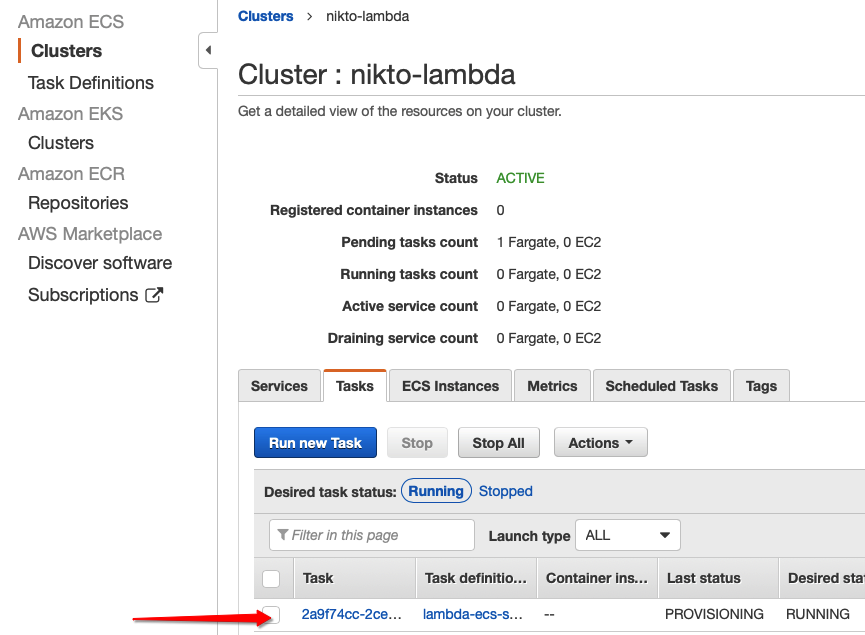

Let’s have a look at ECS. You will see that a “nikto-lambda” cluster has been created as per the stack, and an ECS task is now running, provisioning our container to run the scan.

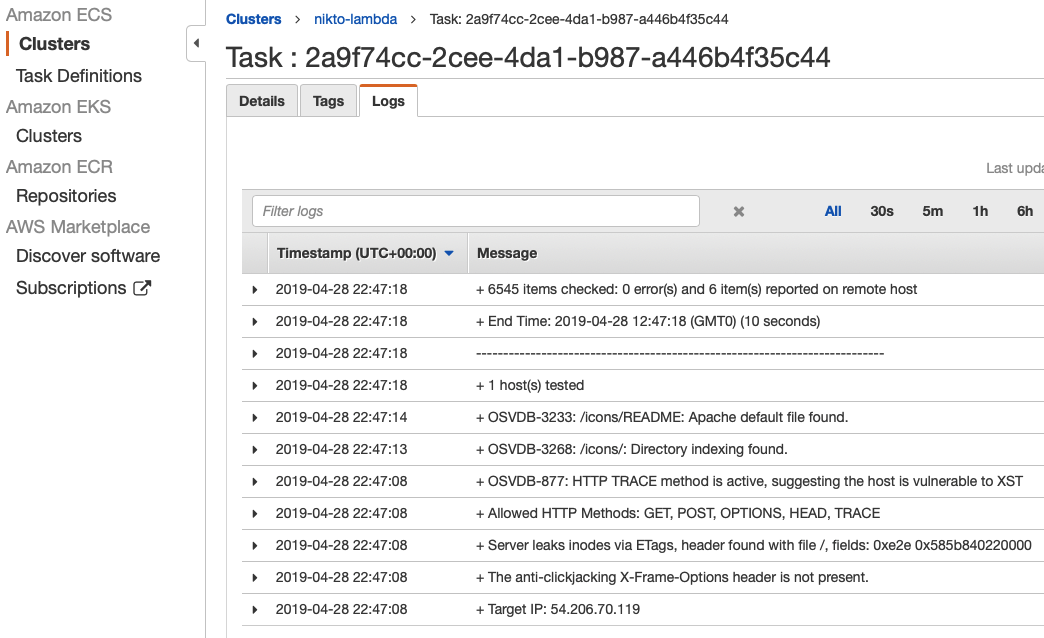

Whilst the scan is running, we can see the log data being generated from the container:

As per our SESFunction defined earlier, we are writing the log data into a DynamoDB table, which should look like this:

And now we can see that the email has successfully been delivered upon completion. You will note the OSVDB items that have been flagged which you will need to attend to as part of your proactive security practice in addressing vulnerabilities.

One mistake I made was not configuring the DynamoDB scan to return the items in the correct order, hence some of the line items are out of order. However, this is good enough for now, as it demonstrates what we are capable of doing by leveraging AWS Lambda, ECS, API Gateway, CloudWatch and SES to assist us in performing vulnerability assessments.

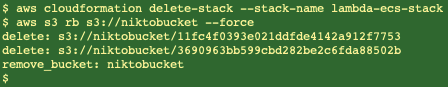

Now let’s blow the stack away:

```shell
aws cloudformation delete-stack --stack-name lambda-ecs-stack
aws s3 rb s3://niktobucket --force
```

The complete YAML file is available below for reference:

```yaml
AWSTemplateFormatVersion: 2010-09-09
Transform: AWS::Serverless-2016-10-31
Description: Vulnerability Assessment using Nikto
Parameters:
  SESEmail:
    Type: String
Outputs:
  NIKTOApiURL:
    Value: !Sub https://${NIKTOApiGateway}.execute-api.${AWS::Region}.amazonaws.com/prod/scan
Resources:
  NIKTOFunctionRole:
    Type: 'AWS::IAM::Role'
    Properties:
      ManagedPolicyArns:
        - 'arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole'
      AssumeRolePolicyDocument:
        Version: 2012-10-17
        Statement:
          - Effect: Allow
            Sid: Lambda
            Principal:
              Service:
                - lambda.amazonaws.com
            Action:
              - 'sts:AssumeRole'
      Policies:
        - PolicyName: ECS
          PolicyDocument:
            Statement:
              - Effect: Allow
                Action:
                  - 'ecs:RunTask'
                Resource:
                  - !Ref NIKTOECSTaskDefinition
        - PolicyName: IAM
          PolicyDocument:
            Statement:
              - Effect: Allow
                Action:
                  - 'iam:PassRole'
                Resource:
                  - !GetAtt NIKTOExecutionRole.Arn
  NIKTOApiGatewayRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service: apigateway.amazonaws.com
            Action: sts:AssumeRole
      Policies:
        - PolicyName: InvokeLambda
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - lambda:InvokeFunction
                Resource:
                  - !GetAtt NIKTOFunction.Arn
  NIKTOExecutionRole:
    Type: 'AWS::IAM::Role'
    Properties:
      ManagedPolicyArns:
        - 'arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy'
      AssumeRolePolicyDocument:
        Version: 2012-10-17
        Statement:
          - Effect: Allow
            Sid: ECS
            Principal:
              Service:
                - ecs-tasks.amazonaws.com
            Action:
              - 'sts:AssumeRole'
  SESFunctionRole:
    Type: 'AWS::IAM::Role'
    Properties:
      ManagedPolicyArns:
        - 'arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole'
      AssumeRolePolicyDocument:
        Version: 2012-10-17
        Statement:
          - Effect: Allow
            Sid: Lambda
            Principal:
              Service:
                - lambda.amazonaws.com
            Action:
              - 'sts:AssumeRole'
      Policies:
        - PolicyName: SES
          PolicyDocument:
            Statement:
              - Effect: Allow
                Action:
                  - 'ses:SendEmail'
                Resource: '*'
        - PolicyName: DynamoDB
          PolicyDocument:
            Statement:
              - Effect: Allow
                Action:
                  - 'dynamodb:Get*'
                  - 'dynamodb:Query'
                  - 'dynamodb:Delete*'
                  - 'dynamodb:Update*'
                  - 'dynamodb:Scan*'
                  - 'dynamodb:PutItem'
                Resource: !GetAtt NIKTOTable.Arn
  NIKTOCluster:
    Type: 'AWS::ECS::Cluster'
    Properties:
      ClusterName: nikto-lambda
  NIKTOECSTaskDefinition:
    Type: 'AWS::ECS::TaskDefinition'
    Properties:
      Cpu: 512
      Memory: 2GB
      NetworkMode: awsvpc
      RequiresCompatibilities:
        - FARGATE
      ExecutionRoleArn: !GetAtt NIKTOExecutionRole.Arn
      ContainerDefinitions:
        - Name: nikto
          Image: k0st/alpine-nikto
          LogConfiguration:
            LogDriver: awslogs
            Options:
              awslogs-group: !Ref NIKTOLogGroup
              awslogs-region: !Sub ${AWS::Region}
              awslogs-stream-prefix: nikto
  NIKTOFunction:
    Type: 'AWS::Serverless::Function'
    Properties:
      Handler: index.handler
      Runtime: python3.6
      InlineCode: |
        # Lambda function code. Takes in 3 mandatory parameters via the REST API:
        # NIKTOHost - The host you wish to scan
        # NIKTOPort - The port to scan
        # ssl - "true" or "false" to set the SSL flag
        # ECS image based on k0st/alpine-nikto from Docker Hub
        import os
        import boto3
        import logging
        import json

        logger = logging.getLogger()
        logger.setLevel(logging.INFO)
        os.environ['AWS_DEFAULT_REGION'] = os.environ['AWSREGION']

        def handler(event, context):
            logger.info(json.dumps(event, indent=2))
            body = json.loads(event['body'])
            logger.info(body)
            # Arguments passed to the nikto container as a command override
            command = ["-host", body['NIKTOHost'], "-port", str(body['NIKTOPort'])]
            if body['ssl'] == "true":
                command.append("-ssl")
            try:
                client = boto3.client('ecs')
                response = client.run_task(
                    cluster=os.environ['NIKTOCluster'],
                    taskDefinition=os.environ['NIKTOECSTaskArn'],
                    count=1,
                    launchType='FARGATE',
                    overrides={
                        'containerOverrides': [
                            {
                                'name': 'nikto',
                                'command': command
                            }
                        ]
                    },
                    networkConfiguration={
                        'awsvpcConfiguration': {
                            'subnets': [os.environ['NIKTOSubnet']],
                            'securityGroups': [os.environ['NIKTOSecGroup']],
                            'assignPublicIp': 'ENABLED'
                        }
                    }
                )
                logger.info(response)
                return {"statusCode": 200}
            except Exception:
                logger.exception("Failed to launch the Nikto ECS task")
                return {"statusCode": 500}
      Role: !GetAtt NIKTOFunctionRole.Arn
      Environment:
        Variables:
          NIKTOCluster: !GetAtt NIKTOCluster.Arn
          NIKTOECSTaskArn: !Ref NIKTOECSTaskDefinition
          NIKTOSubnet: !Ref NIKTOSubnet
          NIKTOSecGroup: !Ref NIKTOSG
          AWSREGION: !Sub ${AWS::Region}
  SESFunction:
    Type: 'AWS::Serverless::Function'
    Properties:
      Handler: index.handler
      Runtime: python3.6
      InlineCode: |
        import gzip
        import json
        import base64
        import boto3
        import os
        import logging
        from boto3.dynamodb.conditions import Attr

        logger = logging.getLogger()
        logger.setLevel(logging.INFO)

        def handler(event, context):
            logger.info(json.dumps(event, indent=2))
            # CloudWatch Logs delivers the batch as base64-encoded, gzipped JSON
            cw_data = event['awslogs']['data']
            compressed_payload = base64.b64decode(cw_data)
            uncompressed_payload = gzip.decompress(compressed_payload)
            payload = json.loads(uncompressed_payload)
            log_events = payload['logEvents']
            stream_name = payload['logStream']
            sendemail = False

            # Let's dump the logs into DynamoDB
            dynamodb = boto3.resource('dynamodb')
            table = dynamodb.Table(os.environ['NIKTOTable'])
            for log_event in log_events:
                table.put_item(
                    Item={
                        'streamName': stream_name,
                        'timestamp': log_event['timestamp'],
                        'id': log_event['id'],
                        'message': log_event['message']
                    }
                )
                # Let's check if the NIKTO scan completed; if so, set the sendemail flag
                if "host(s) tested" in log_event['message']:
                    sendemail = True

            if not sendemail:
                return

            # Gather every message stored for this log stream
            # (a single Scan call, so results are neither paginated nor ordered)
            NIKTOLogs = table.scan(
                FilterExpression=Attr('streamName').eq(stream_name),
                ProjectionExpression="#msg",
                ExpressionAttributeNames={"#msg": "message"}
            )
            logger.info(json.dumps(NIKTOLogs['Items']))
            scan_data = "".join(str(i['message']) + "\n" for i in NIKTOLogs['Items'])

            # Need to use the us-east-1 region for SES
            client = boto3.client('ses', region_name='us-east-1')
            response = client.send_email(
                Destination={
                    'ToAddresses': [os.environ['SESEmail']]
                },
                Message={
                    'Body': {
                        'Text': {
                            'Charset': 'UTF-8',
                            'Data': scan_data,
                        },
                    },
                    'Subject': {
                        'Charset': 'UTF-8',
                        'Data': "NIKTO Logging Event",
                    },
                },
                Source=os.environ['SESEmail']
            )
            logger.info(response)
      Role: !GetAtt SESFunctionRole.Arn
      Environment:
        Variables:
          SESEmail: !Ref SESEmail
          NIKTOTable: !Ref NIKTOTable
  NIKTOApiGateway:
    Type: AWS::ApiGateway::RestApi
    Properties:
      Name: !Sub "${AWS::StackName}-NIKTOApiGateway"
      Description: !Sub "${AWS::StackName}-NIKTOApiGateway"
      FailOnWarnings: true
      Body:
        swagger: 2.0
        info:
          description: !Sub "${AWS::StackName}-NIKTOApiGateway"
          version: 1.0
        basePath: /
        schemes:
          - https
        consumes:
          - application/json
        produces:
          - application/json
        paths:
          /scan:
            post:
              description: !Sub "${AWS::StackName}-NIKTOApiGateway"
              x-amazon-apigateway-integration:
                responses:
                  default:
                    statusCode: '200'
                uri: !Sub "arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${NIKTOFunction.Arn}/invocations"
                credentials: !GetAtt NIKTOApiGatewayRole.Arn
                passthroughBehavior: when_no_match
                httpMethod: POST
                type: aws_proxy
                requestTemplates:
                  "application/json": |
                    {
                      "body" : $input.json('$')
                    }
              operationId: postScan
  NIKTOGatewayDeployment:
    Type: AWS::ApiGateway::Deployment
    Properties:
      RestApiId: !Ref NIKTOApiGateway
      StageName: prod
  NIKTOLogGroup:
    Type: "AWS::Logs::LogGroup"
    Properties:
      RetentionInDays: 1
  LogsSubscriptionFilter:
    Type: AWS::Logs::SubscriptionFilter
    # CloudWatch Logs must be allowed to invoke the function before the filter is created
    DependsOn: LambdaInvokePermission
    Properties:
      LogGroupName: !Ref NIKTOLogGroup
      FilterPattern: ""
      DestinationArn: !GetAtt SESFunction.Arn
  LambdaInvokePermission:
    Type: AWS::Lambda::Permission
    Properties:
      FunctionName: !GetAtt SESFunction.Arn
      Action: 'lambda:InvokeFunction'
      Principal: !Sub "logs.${AWS::Region}.amazonaws.com"
      SourceAccount: !Ref 'AWS::AccountId'
      SourceArn: !GetAtt NIKTOLogGroup.Arn
  NIKTOTable:
    Type: AWS::DynamoDB::Table
    Description: NIKTO Scan Records Table
    Properties:
      TableName: "NIKTOTable"
      AttributeDefinitions:
        - AttributeName: "id"
          AttributeType: "S"
        - AttributeName: "streamName"
          AttributeType: "S"
      KeySchema:
        - AttributeName: "id"
          KeyType: "HASH"
        - AttributeName: "streamName"
          KeyType: "RANGE"
      ProvisionedThroughput:
        ReadCapacityUnits: 1
        WriteCapacityUnits: 1
  NIKTOVPC:
    Type: 'AWS::EC2::VPC'
    Properties:
      CidrBlock: 192.168.0.0/24
  NIKTOSubnet:
    Type: 'AWS::EC2::Subnet'
    Properties:
      CidrBlock: 192.168.0.0/24
      VpcId: !Ref NIKTOVPC
  NIKTOSubnetAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      RouteTableId: !Ref NIKTORouteTable
      SubnetId: !Ref NIKTOSubnet
  NIKTOIGW:
    Type: 'AWS::EC2::InternetGateway'
  NIKTORouteTable:
    Type: 'AWS::EC2::RouteTable'
    Properties:
      VpcId: !Ref NIKTOVPC
  NIKTORoute:
    Type: 'AWS::EC2::Route'
    # The route can only target the gateway once it is attached to the VPC
    DependsOn: NIKTOAttachGateway
    Properties:
      RouteTableId: !Ref NIKTORouteTable
      DestinationCidrBlock: 0.0.0.0/0
      GatewayId: !Ref NIKTOIGW
  NIKTOAttachGateway:
    Type: 'AWS::EC2::VPCGatewayAttachment'
    Properties:
      VpcId: !Ref NIKTOVPC
      InternetGatewayId: !Ref NIKTOIGW
  NIKTOSG:
    Type: 'AWS::EC2::SecurityGroup'
    Properties:
      GroupDescription: Nikto SG
      # Placeholder ingress from localhost only: the scanner needs no inbound
      # traffic, and outbound traffic is allowed by default
      SecurityGroupIngress:
        - IpProtocol: -1
          CidrIp: 127.0.0.1/32
      VpcId: !Ref NIKTOVPC
```